Big Data and Hadoop Course

Licensed IT Training Institute in New Hampshire, USA

Trusted by Global Tech Leaders

Get certified through this program, backed by leading technology partners and delivered by experienced industry professionals.

Big Data and Hadoop Course Overview

The Big Data and Hadoop Training program teaches you how to store, manage, and process large volumes of data using core Big Data concepts and the Hadoop ecosystem. The course covers fundamental Big Data topics, gives in-depth exposure to Hadoop ecosystem tools, and includes real-world implementation labs to prepare you for data engineering roles.

Big Data and Hadoop Key Features

Skills Covered

Connect with Your Learning Advisor Today

Benefits

Boost your career with Big Data & Hadoop! The global big data market is projected to reach $401.2B by 2028, growing at a 12.7% CAGR. Hadoop plays a central role in this growth: the Hadoop market is expected to reach $842.25B by 2030 at a 37.4% CAGR. As a result, demand for skilled professionals is rising across industries.

Big Data & Hadoop Trends: Industry Growth

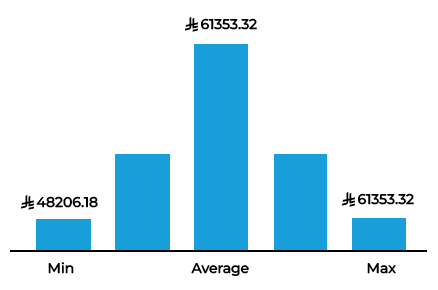

Software Developer Annual Salary

Top Companies Hiring Globally

Training Options

Online Bootcamp

Flexi Pass Enabled: Reschedule your cohort within the first 90 days of access, with 90 days of flexible access to online classes.

- Live Instructor-Led Training

- Hands-On Labs & Practice Exercises

- Access to Recorded Sessions

- Official Learning Materials

- Doubt Clearing & Q&A Sessions

- Mock Tests & Certification Prep

- Flexible Batch Timings

- Post-Training Support

Corporate Training

Transform your talent. Provide comprehensive training to upskill current employees or reskill them for new roles.

Detailed Curriculum

Big Data and Hadoop

Lesson 1: Java Basics, Cloudera QuickStart VM

Lesson 2: Introduction to Big Data

Lesson 3: Technologies for Handling Big Data (Distributed Computing, Hadoop, HDFS, MapReduce)

Lesson 4: Hadoop Ecosystem – HDFS Architecture

Lesson 5: Hadoop Ecosystem – YARN, HBase

Lesson 6: Pig, Pig Latin, Hive, Sqoop

Lesson 7–18:

- MapReduce Implementation Labs

- Pig Operators and Functions Labs

- Hive Implementation Labs

- HBase & NoSQL Data Modeling

- Sqoop & MySQL Integration

- Apache Flume for Real-time Data

About the Course

This course helps learners understand how to process large-scale data efficiently using Hadoop. It includes hands-on training with tools such as Hive, Pig, Sqoop, and HBase.

Why Pursue This Field:

Big Data is the foundation of digital transformation. Mastering Hadoop opens doors to high-demand roles in data engineering and analytics.

Course Learning Objectives:

- Learn to manage and analyze big datasets

- Master the Hadoop ecosystem components

- Implement data processing tasks using MapReduce, Hive, Pig, etc.

Career Paths After Certification:

- Big Data Developer

- Data Engineer

- Hadoop Administrator

- ETL Developer

Tools Covered in This Course

Turn Knowledge into Action with Industry Projects

Foundational Project:

- Execute MapReduce jobs

- Design and run Hive queries

Intermediate Project:

- Integrate MySQL with Hadoop via Sqoop

- Stream data using Apache Flume

Advanced Project:

- Model NoSQL data using HBase

Get Certified

Stand Out with an Industry-Ready Certification

Earn your Industry-Ready Certificate (IRC) by completing your projects and passing the pre-placement assessment.

Certification Program Advantage

- Lifetime access to course materials

- Instructor support for doubt clearing

- Access to future batch sessions

- Career guidance for job placement and interview prep

Placement Assistance

Unique System Skills learners receive 360-degree career guidance and placement assistance.

Placement Assistance Process

Enroll in the course

Complete online training sessions

Build real-world projects

Practice with mock interviews

Interview preparation

What Our Learners Say

"Outstanding course for Big Data beginners! Instructors broke down Hadoop and Spark with real-world examples. Hands-on labs and the capstone project were key—I landed a data engineer role post-training. Fast-paced but worth it!"

"Career-changing experience! Mastered Hadoop and Spark with practical GCC-focused insights. The job assistance (résumé help, mock interviews) led to a promotion. Perfect for upskilling in Saudi Arabia’s tech sector."

"Great mix of theory and practice. Hadoop sessions were intense but rewarding. Networking with GCC peers and local job leads were highlights. Slightly more Kafka depth would’ve been ideal. Now freelancing confidently!"

Big Data and Hadoop Course FAQs

Big Data refers to large, complex data sets that traditional tools can’t handle efficiently. Hadoop is an open-source framework used to process these massive datasets in a distributed environment.

A Big Data Developer builds and manages large-scale data processing systems using tools like Hadoop, Hive, and MapReduce. They work with structured and unstructured data, designing systems for performance and scalability.

- Industry-ready skills

- Hands-on experience

- Prepares you for high-paying Big Data roles

- 40 hours of instructor-led sessions

- Online classes with weekday/weekend options

- Certification on completion

- Submit the form and you will receive a call explaining the enrollment process

- Entry-level and mid-level Big Data roles in MNCs and startups

- Salary uplift through certification

- IT & Software

- E-commerce

- Healthcare

- Finance

- Telecommunications

- Media & Entertainment

- Oil & Gas

Other Related Programs

Data Science with Python

Spark and Scala for Big Data

Advanced SQL & Data Warehousing

Machine Learning Fundamentals

- Flat 51, Bldg. 1301, Road 4526, Block 340, Shabab Avenue, Juffair, Bahrain

- Phone: +973 3651 7061

- Email: gcc@systemskills.com

- Opening Hours: Saturday to Thursday, 8:30 AM – 5:30 PM (closed Friday)


Copyright © 2025 All Rights Reserved | Website Developed by Digital Mogli LLP